Academic research into Task and Motion Planning (TAMP) has proceeded for well over a decade, producing key results in the analysis of, and algorithms for, TAMP scenarios. However, while certain aspects of TAMP research have been reduced to practice, its overall inroads so far remain limited. This workshop aims to bring together academic and industry experts to discuss and reflect on the current state and direction of TAMP research and practice. Academic participants will offer the latest theoretical results and challenges in TAMP, while industry participants will present both successful applications and pragmatic challenges to TAMP adoption. In particular, we aim to identify and discuss unsolved scientific challenges and necessary systems developments to broaden the impact of TAMP research. We hope that this workshop will stimulate insightful discussion and help prioritize efforts in TAMP research to address key practical needs.

This proposed workshop is supported by the IEEE RAS Technical Committee on Algorithms for Planning and Control of Robot Motion, as confirmed by the Technical Committee co-chairs Tsz-Chiu Au, Aleksandra Faust, Vadim Indelman, Marco Morales, Florian T. Pokorny, Hyejeong Ryu, and Sarah Tang.

This workshop is intended for researchers from academia, industry and government working in AI planning, motion planning, and controls who are interested in improving the autonomy of robots for complex, real-world tasks such as mobile manipulation.

The two main target audiences for the workshop are: (1) researchers actively working on new methods, future trends, and open questions in task and motion planning; and (2) people who are interested in learning about the current state of the art in order to incorporate these methods into their own projects. We strongly encourage the participation of graduate students.

This workshop follows previous workshops on various aspects of Task and Motion Planning held from 2016 to 2020 by the same organizers. Past workshops received excellent participation, with approximately 50 attendees, 10 presented posters, and engaging group discussions. During the previous workshops, numerous questions were raised regarding handling uncertainty and operating in real-world environments. This workshop aims to address these needs with a specific focus on robust TAMP in practice.

This workshop continues a series of TAMP workshops:

Bio: Barrett Ames is co-founder of BotBuilt, a startup that uses robots, AI, and computer vision to build houses. BotBuilt software analyzes building plans, and its robots build exact components, enabling housing frames to be assembled onsite in hours. Dr. Ames completed his PhD at Duke University and his BS in Computer Science at Cornell. He has previously worked at TRACLabs and Realtime Robotics.

Title: Large Language Models for Solving Long-Horizon Manipulation Problems

Abstract: My long-term research goal is to enable real robots to manipulate any kind of object so that they can perform many different tasks in a wide variety of application scenarios, such as in our homes, in hospitals, warehouses, or factories. Many of these tasks will require long-horizon reasoning and sequencing of skills to achieve a goal state. In this talk, I will present our work on enabling long-horizon reasoning on real robots for a variety of long-horizon tasks that can be solved by sequencing a large variety of composable skill primitives. I will specifically focus on the different ways Large Language Models (LLMs) can help with solving these long-horizon tasks. The first part of my talk will be on TidyBot, a robot for personalised household clean-up. One of the key challenges in robotic household clean-up is deciding where each item goes. People's preferences can vary greatly depending on personal taste or cultural background: one person might want shirts in the drawer, another might want them on the shelf. How can we infer these user preferences from only a handful of examples in a generalizable way? Our key insight is that summarization with LLMs is an effective way to achieve generalization in robotics. Given the generalised rules, I will then show how TidyBot solves the long-horizon task of cleaning up a home. In the second part of my talk, I will focus on more complex long-horizon manipulation tasks that exhibit geometric dependencies between different skills in a sequence. In these tasks, the way a robot performs a certain skill determines whether a follow-up skill in the sequence can be executed at all. I will present an approach called text2motion that utilises LLMs for task planning without the need to define complex symbolic domains. I will also show how we can verify whether the plan that the LLM came up with is actually feasible. The basis for this verification is a library of learned skills and an approach for sequencing these skills to resolve the geometric dependencies prevalent in long-horizon tasks.

Bio: Jeannette Bohg is a Professor for Robotics at Stanford University and directs the Interactive Perception and Robot Learning Lab. Her research generally explores two questions: What are the underlying principles of robust sensorimotor coordination in humans, and how can we implement these principles on robots? Research on this topic lies at the intersection of Robotics, Machine Learning, and Computer Vision, and her lab focuses specifically on Robotic Grasping and Manipulation.

Title: Long-horizon manipulation: the crossroads of planning and learning

Bio: Georgia Chalvatzaki is a Full Professor for Robot Perception, Reasoning and Interaction Learning at the Computer Science Department of the Technical University of Darmstadt and Hessian.AI. Before that, she was an Assistant Professor from February 2022 and an Independent Research Group Leader from March 2021, after receiving the renowned Emmy Noether Programme (ENP) grant from the German Research Foundation (DFG). This project was awarded within the ENP Artificial Intelligence call of the DFG; only 9 out of 91 proposals were selected for funding. The programme enables outstanding young scientists to qualify for a university professorship by independently leading a junior research group over six years.

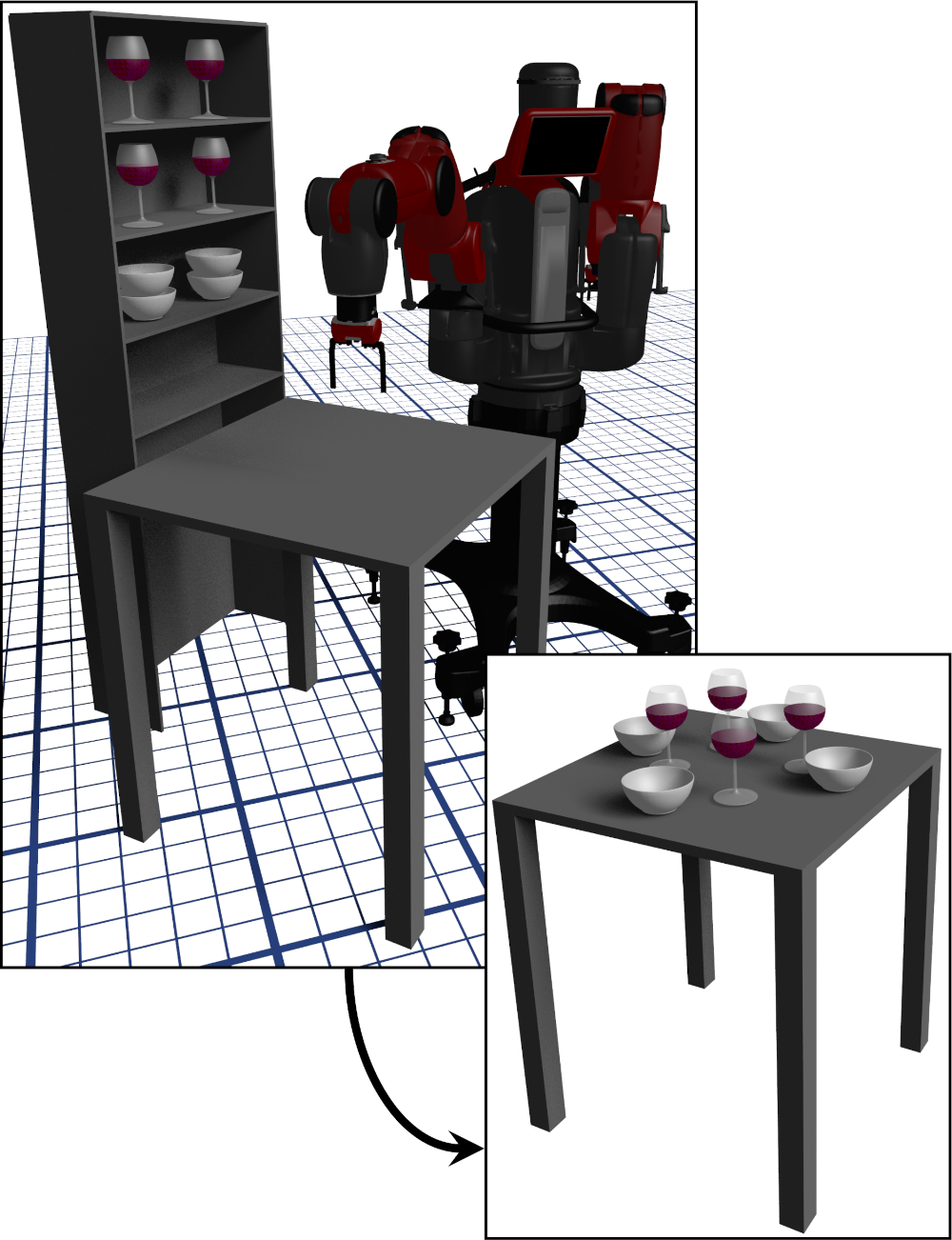

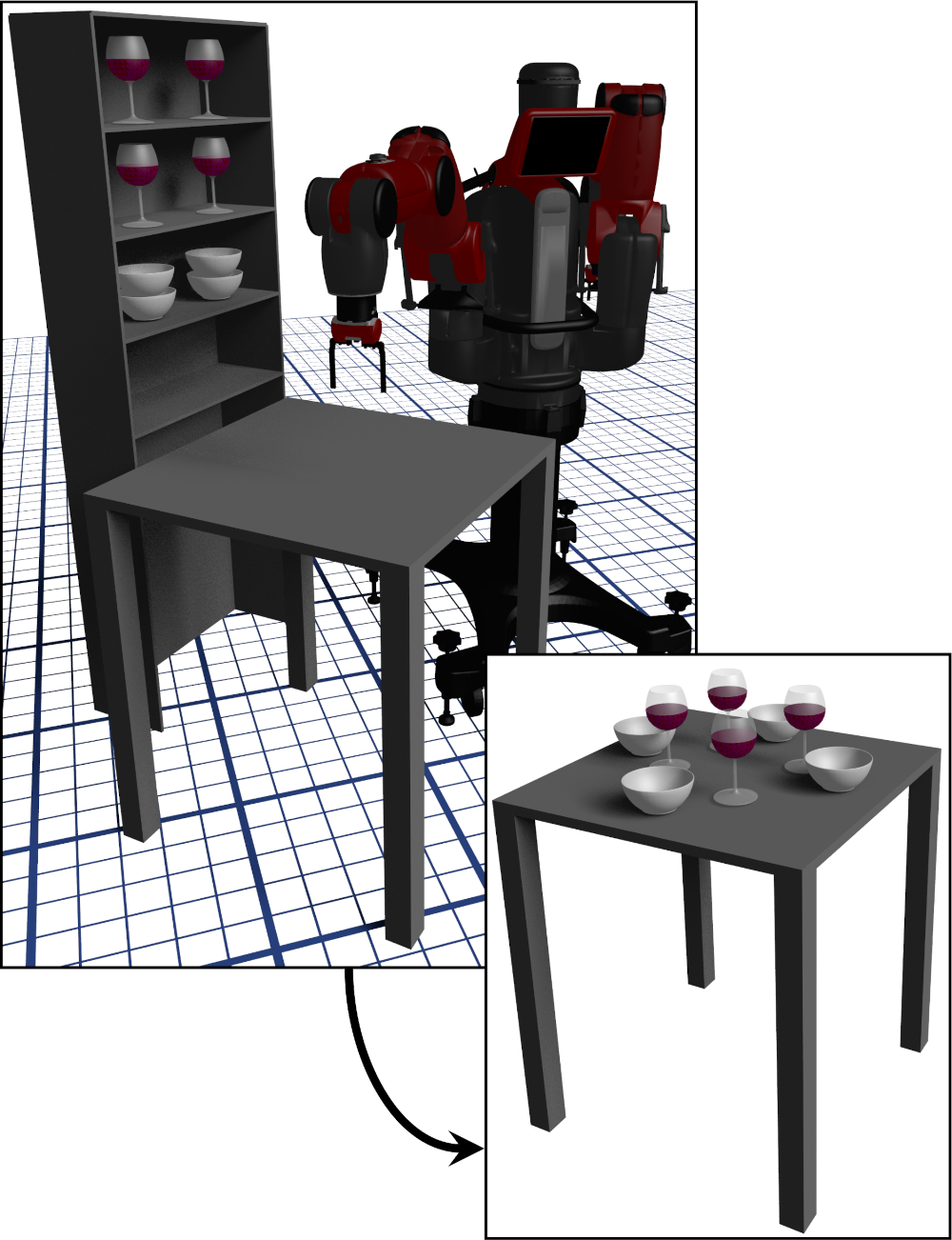

In her research group, iROSA, Dr. Chalvatzaki and her new team will research the topic of "Robot Learning of Mobile Manipulation for Assistive Robotics." Dr. Chalvatzaki proposes new methods at the intersection of machine learning and classical robotics, taking research on embodied AI robotic assistants one step further. The research in iROSA proposes novel methods for combined planning and learning to enable mobile manipulator robots to solve complex tasks in house-like environments, with the human in the loop of the interaction process.

From October 2019, she was a postdoctoral researcher in the Intelligent Autonomous Systems group, advised by Jan Peters. Dr. Chalvatzaki completed her Ph.D. studies in 2019 at the Intelligent Robotics and Automation Lab at the Electrical and Computer Engineering School of the National Technical University of Athens, Greece, with her thesis "Human-Centered Modeling for Assistive Robotics: Stochastic Estimation and Robot Learning in Decision Making."

Title: Reintegrating AI: Skills, Symbols, and the Sensorimotor Dilemma

Abstract: I will address the question of how a robot should learn an abstract, task-specific representation of an environment. I will present a constructivist approach, in which the computation the representation is required to support (here, planning using a given set of motor skills) is precisely defined, and its properties are then used to build a representation that is capable of supporting it by construction. The result is a formal link between the skills available to a robot and the symbols it should use to plan with them. I will present an example of a robot autonomously learning a (sound and complete) abstract representation directly from sensorimotor data, and then using it to plan. I will also discuss ongoing work on making the resulting abstractions both practical to learn and portable across tasks.

Bio: George Konidaris is an Associate Professor of Computer Science and director of the Intelligent Robot Lab at Brown, which forms part of bigAI (Brown Integrative, General AI). His research is driven by the overarching scientific goal of understanding the fundamental computational processes that generate intelligence, and using them to design a generally-intelligent robot.

Dr. Konidaris is also the co-founder of two technology startups. He co-founded, and serves as the Chief Roboticist of, Realtime Robotics, a startup based on his research on robot motion planning that aims to make robotic automation simpler, better, and faster. He also co-founded Lelapa AI, a commercial AI research lab based in Johannesburg, South Africa, and focused on technology by and for Africans.

Title: Planning under Uncertainty: Revisiting the Curse of History

Bio: Hanna Kurniawati is a Professor at the School of Computing, Australian National University (ANU), and the SmartSat CRC Professorial Chair for System Autonomy, Intelligence, and Decision Making. Her research interests span planning under uncertainty, robotics, robot motion planning, integrated planning and learning, and computational geometry applications. Specifically, she focuses on algorithms to enable robust decision theory to become practical software tools, with applications in robotics and the assurance of autonomous systems.

Dr. Kurniawati is the ANU Node Lead and the Planning & Control theme lead for the Australian Robotics Inspection and Asset Management (ARIAM) Hub. At ANU, she founded the Robot Decision Making group and was a deputy lead of the interdisciplinary research project Humanising Machine Intelligence. She earned a BSc in Computer Science from the University of Indonesia and a PhD in Computer Science, for work in Robot Motion Planning, from the National University of Singapore. After her PhD, she worked as a Postdoctoral Associate, and then a Research Scientist, at the Singapore-MIT Alliance for Research and Technology, MIT. She was a faculty member at the University of Queensland School of ITEE and moved to ANU in 2019 as an ANU and CS Futures Fellow.

Title: From Click to Delivery: Challenges and Opportunities in Task and Motion Planning at Amazon

Abstract: Amazon tackles the problem of coordinating a heterogeneous fleet of hundreds of thousands of robots to go from a website click to a delivery at your door. We leverage problem decomposition to solve this huge optimization problem. In this talk I will provide an overview of the underlying motion, allocation, perception, and manipulation sub-problems, and discuss some of the challenges and opportunities connected to their integration.

Bio: Federico Pecora is a Senior Manager, Applied Science at Amazon Robotics. His research lies at the intersection of Artificial Intelligence and Robotics and focuses on combining methods from these two fields to develop solutions with high impact in society and industry. He believes strongly in alternating between application-driven and basic research: problems in concrete application settings often pose the most interesting and general research challenges, and addressing these challenges leads to better solutions for real applications.

Before joining Amazon, Dr. Pecora founded and was Head of the Multi-Robot Planning and Control Lab at Örebro University. He also led several large applied research projects co-funded by industry partners, including Atlas Copco/Epiroc, Volvo Construction Equipment, Volvo Trucks, Kollmorgen, Husqvarna, Scania, and Bosch.

Title: TAMP at operational scale: do operations actually need motion planning?

Abstract: At scale, TAMP is actually operations planning. In order for operations planning to work, we must aggressively abstract away details to get to a computable result; in many ways, motion planning at the level of robot controls can seem irrelevant when considering complex operations at large scales. We will look at ways in which motion planning is and is not an important part of planning complex operations, and consider some of the key challenges in operations that may inspire future TAMP problem formulations and help to understand the role and requirements of motion planning.

Bio: Ethan Stump is a researcher within the U.S. Army Research Laboratory's Computational and Information Sciences Directorate, where he works on machine learning applied to robotics and control, with a focus on ground robot navigation and human-guided reinforcement learning. He received his Ph.D. and M.S. degrees from the University of Pennsylvania, Philadelphia, and his B.S. degree from Arizona State University, Tempe, all in mechanical engineering. Dr. Stump is a government lead in intelligence for the ARL Robotics Collaborative Technology Alliance and in distributed intelligence for the ARL Distributed Collaborative Intelligent Systems Technology (DCIST) Collaborative Research Alliance. During his time at ARL, he has worked on diverse robotics-related topics, including implementing mapping and navigation technologies to enable baseline autonomous capabilities for teams of ground robots, and developing controller synthesis for managing the deployment of multi-robot teams to perform repeating tasks such as persistent surveillance by tying them to formal task specifications.

| Time | Session |
|---|---|
| 09:00-09:30 | Workshop Introduction |
| 09:30-10:00 | Federico Pecora: From Click to Delivery: Challenges and Opportunities in Task and Motion Planning at Amazon |
| 10:00-10:30 | Jeannette Bohg: Large Language Models for Solving Long-Horizon Manipulation Problems |
| 10:30-11:00 | Coffee Break |
| 11:00-11:30 | Barrett Ames |
| 11:30-12:00 | Hanna Kurniawati: Planning under Uncertainty: Revisiting the Curse of History |
| 12:00-12:30 | Poster Lightning Talks |
| 12:30-13:30 | Lunch |
| 13:30-14:30 | Poster Session |
| 14:30-15:00 | Georgia Chalvatzaki: Long-horizon manipulation: the crossroads of planning and learning |
| 15:00-15:30 | Coffee Break |
| 15:30-16:00 | George Konidaris: Reintegrating AI: Skills, Symbols, and the Sensorimotor Dilemma |
| 16:00-16:30 | Ethan Stump: TAMP at operational scale: do operations actually need motion planning? |
| 16:30-17:30 | Panel Discussion and Wrap-Up |